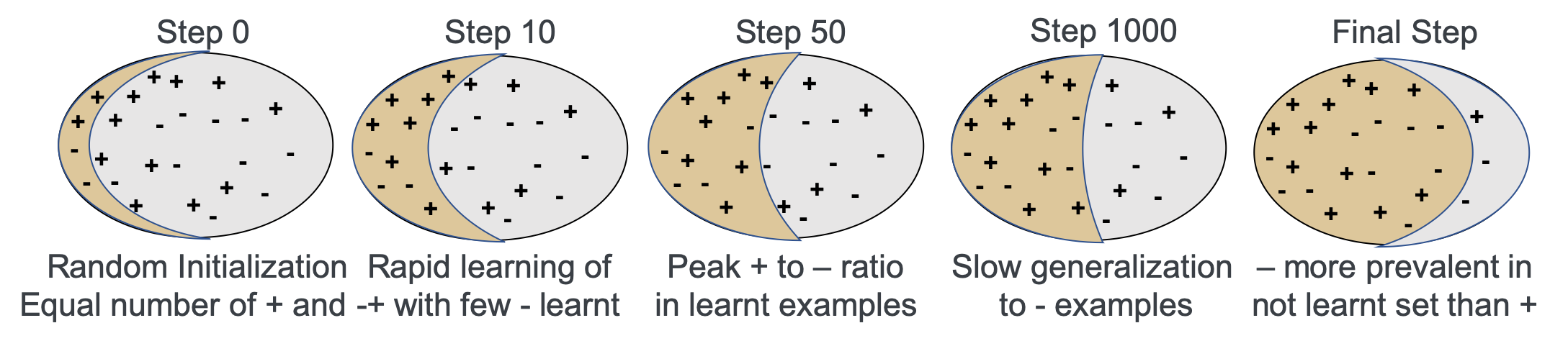

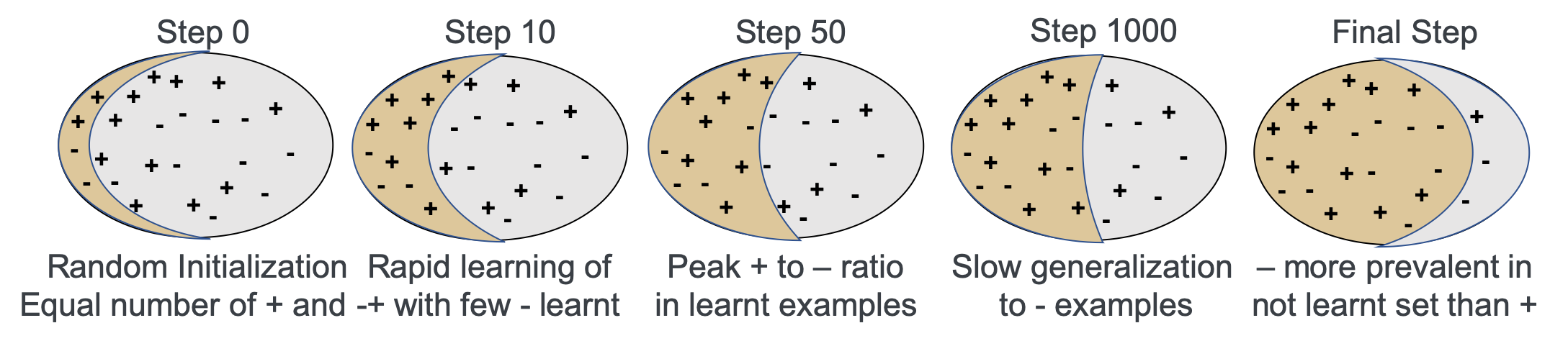

In this paper, we empirically investigate the training journey of deep neural networks (DNNs) relative to fully trained shallow machine learning models. We observe that DNNs learn to correctly classify shallow-learnable examples in the early epochs before learning the harder examples. We build on this observation to suggest a way of partitioning the dataset into hard and easy subsets that can be used to improve the overall training process. Incidentally, we also found evidence of a subset of intriguing examples, across all the datasets we considered, that were shallow-learnable but not deep-learnable. To aid reproducibility, we release our code for this work on GitHub.
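As a rough illustration of the partitioning idea, the following minimal sketch splits example indices into easy and hard subsets. It assumes that an example counts as "shallow-learnable" when a fully trained shallow model classifies it correctly; the exact criterion used in this work may differ. The function names `partition_by_shallow_model` and `shallow_only_examples`, and the toy labels and predictions, are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence, Tuple


def partition_by_shallow_model(
    labels: Sequence[int], shallow_preds: Sequence[int]
) -> Tuple[List[int], List[int]]:
    """Split example indices into (easy, hard).

    Assumption: an example is 'easy' (shallow-learnable) if the shallow
    model's prediction matches its label, and 'hard' otherwise.
    """
    if len(labels) != len(shallow_preds):
        raise ValueError("labels and shallow_preds must have the same length")
    easy: List[int] = []
    hard: List[int] = []
    for i, (y, p) in enumerate(zip(labels, shallow_preds)):
        (easy if y == p else hard).append(i)
    return easy, hard


def shallow_only_examples(
    labels: Sequence[int],
    shallow_preds: Sequence[int],
    deep_preds: Sequence[int],
) -> List[int]:
    """Indices the shallow model gets right but the deep model gets wrong."""
    return [
        i
        for i, (y, s, d) in enumerate(zip(labels, shallow_preds, deep_preds))
        if s == y and d != y
    ]


# Toy data (hypothetical): true labels and predictions from each model.
labels = [0, 1, 1, 0, 2]
shallow_preds = [0, 1, 0, 0, 1]
deep_preds = [0, 1, 1, 1, 2]

easy, hard = partition_by_shallow_model(labels, shallow_preds)
intriguing = shallow_only_examples(labels, shallow_preds, deep_preds)
print(easy, hard, intriguing)
```

In practice the predictions would come from a trained shallow classifier and a trained DNN evaluated on the same examples; the sketch only shows the bookkeeping on top of those predictions.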